Deploying a highly available Kubernetes cluster with KubeSphere

I. Environment preparation

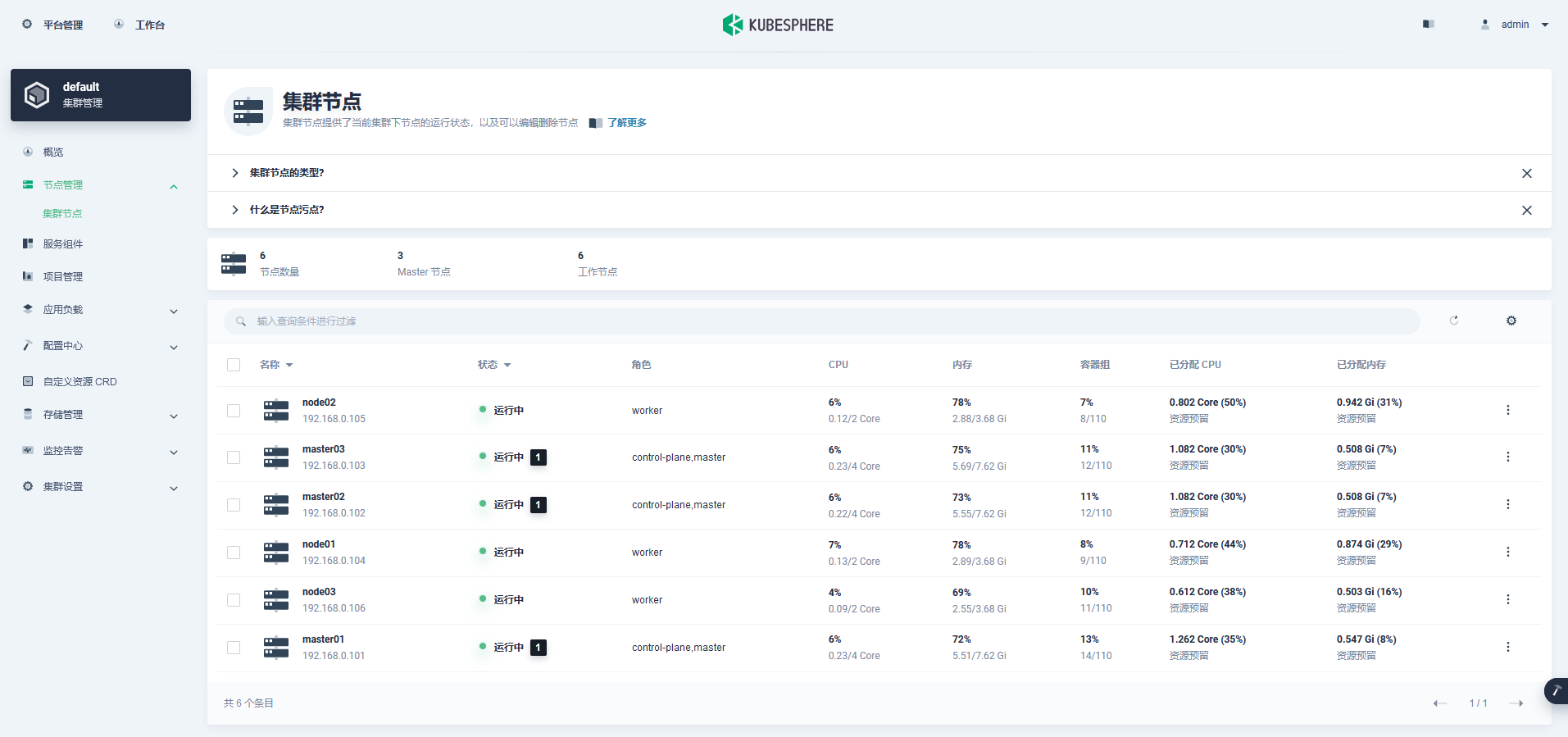

| IP | hostname | configuration | role |
|---|---|---|---|
| 192.168.0.97 | lvs01 | 2 cores, 4 GB | keepalived master |
| 192.168.0.98 | lvs02 | 2 cores, 4 GB | keepalived backup |
| 192.168.0.99 | vip | 2 cores, 4 GB | vip |
| 192.168.0.101 | master01 | 4 cores, 8 GB | kk |
| 192.168.0.102 | master02 | 4 cores, 8 GB | docker-ce |
| 192.168.0.103 | master03 | 4 cores, 8 GB | docker-ce |
| 192.168.0.104 | node01 | 2 cores, 4 GB | docker-ce |
| 192.168.0.105 | node02 | 2 cores, 4 GB | docker-ce |
| 192.168.0.106 | node03 | 2 cores, 4 GB | docker-ce |

All kk (KubeKey) deployment steps are run on master01, so master01 must be able to log in to every other node over SSH without a password.
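A minimal sketch of how passwordless SSH from master01 could be set up, assuming root login and the node addresses from the table above. The `NODES` list, the `DRY_RUN` switch and the helper names are illustrative and not part of KubeKey. By default the script only prints the commands it would run.

```shell
#!/bin/bash
# Hypothetical helper: distribute master01's public key to every other node.
# NODES and DRY_RUN are illustrative assumptions, not KubeKey settings.
NODES="192.168.0.102 192.168.0.103 192.168.0.104 192.168.0.105 192.168.0.106"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

setup_ssh_keys() {
    # Generate a key pair once; skip this step if one already exists.
    if [ ! -f "$HOME/.ssh/id_rsa" ]; then
        run ssh-keygen -t rsa -b 4096 -N "" -f "$HOME/.ssh/id_rsa"
    fi
    # Copy the public key to each node (asks for the password once per node).
    for ip in $NODES; do
        run ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "root@$ip"
    done
}

setup_ssh_keys
```

Running it with `DRY_RUN=0` on master01 executes the commands; afterwards `ssh root@192.168.0.102 hostname` should return without a password prompt.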

II. keepalived + nginx high-availability configuration

    yum -y install nginx keepalived
    yum -y install nginx-all-modules.noarch

keepalived MASTER configuration file (keepalived.conf):

    global_defs {
        notification_email {
            934588176@qq.com
        }
        notification_email_from sns-lvs@gmail.com
        smtp_server smtp.hysec.com
        smtp_connection_timeout 30
        router_id nginx_master    # ID of this node; should be unique within the network
    }

    vrrp_script chk_http_port {
        script "/usr/local/src/check_nginx_pid.sh"    # run this script by hand once to make sure it executes correctly
        interval 2                                    # interval between script runs, in seconds
        weight 2
    }

    vrrp_instance VI_1 {
        state MASTER            # keepalived role: MASTER for the primary, BACKUP for the standby
        interface ens33         # network interface used for VRRP traffic (the NIC of this CentOS host)
        virtual_router_id 66    # virtual router ID; must be identical on master and backup
        priority 100            # priority; the node with the higher value takes over the VIP
        advert_int 1            # VRRP advertisement interval in seconds (default 1)
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        track_script {
            chk_http_port       # call the check script
        }
        virtual_ipaddress {
            192.168.0.99        # the virtual IP (VIP); several may be listed, one per line
        }
    }

keepalived BACKUP configuration file (keepalived.conf):

    global_defs {
        notification_email {
            934588176@qq.com
        }
        notification_email_from sns-lvs@gmail.com
        smtp_server smtp.hysec.com
        smtp_connection_timeout 30
        router_id nginx_backup    # ID of this node; should be unique within the network
    }

    vrrp_script chk_http_port {
        script "/usr/local/src/check_nginx_pid.sh"    # run this script by hand once to make sure it executes correctly
        interval 2                                    # interval between script runs, in seconds
        weight 2
    }

    vrrp_instance VI_1 {
        state BACKUP            # keepalived role: MASTER for the primary, BACKUP for the standby
        interface ens33         # network interface used for VRRP traffic (the NIC of this CentOS host)
        virtual_router_id 66    # virtual router ID; must be identical on master and backup
        priority 99             # priority; the node with the higher value takes over the VIP
        advert_int 1            # VRRP advertisement interval in seconds (default 1)
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        track_script {
            chk_http_port       # call the check script
        }
        virtual_ipaddress {
            192.168.0.99        # the virtual IP (VIP); several may be listed, one per line
        }
    }

Check script, /usr/local/src/check_nginx_pid.sh:

    #!/bin/bash
    # Count the running nginx processes.
    A=$(ps -C nginx --no-header | wc -l)
    if [ "$A" -eq 0 ]; then
        systemctl start nginx    # nginx is down: try to start it again
        if [ "$(ps -C nginx --no-header | wc -l)" -eq 0 ]; then    # the restart failed
            exit 1
        else
            exit 0
        fi
    else
        exit 0
    fi

Start keepalived:

    systemctl start keepalived
    systemctl enable keepalived

nginx.conf configuration; the important part is the stream block:

    user nginx;
    worker_processes auto;
    error_log /var/log/nginx/error.log notice;
    pid /var/run/nginx.pid;

    events {
        worker_connections 1024;
    }

    stream {
        log_format proxy '$time_local|$remote_addr|$upstream_addr|$protocol|$status|'
                         '$session_time|$upstream_connect_time|$bytes_sent|$bytes_received|'
                         '$upstream_bytes_sent|$upstream_bytes_received';

        upstream api_server {
            least_conn;
            server 192.168.0.101:6443 max_fails=3 fail_timeout=5s;
            server 192.168.0.102:6443 max_fails=3 fail_timeout=5s;
            server 192.168.0.103:6443 max_fails=3 fail_timeout=5s;
        }

        server {
            listen 6443;
            proxy_pass api_server;
            access_log /var/log/nginx/proxy.log proxy;
        }
    }

    http {
        include /etc/nginx/mime.types;
        default_type application/octet-stream;

        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';

        access_log /var/log/nginx/access.log main;

        sendfile on;
        #tcp_nopush on;

        keepalive_timeout 65;

        #gzip on;

        include /etc/nginx/conf.d/*.conf;
    }

Start nginx:

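    systemctl start nginx
    systemctl enable nginx

The nginx and keepalived configuration is fairly simple, so only the key parts are shown above. One point to watch: when configuring keepalived, remember to disable iptables; otherwise the VRRP advertisements are blocked and both nodes end up holding the VIP. You should also verify that the keepalived check script detects a stopped nginx and restarts it, and that the VIP fails over correctly.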
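One way to test VIP failover is to ask each load balancer whether it currently holds the VIP. The sketch below assumes the interface `ens33` and the VIP `192.168.0.99` from the configuration above; the function name `holds_vip` is illustrative.

```shell
#!/bin/bash
# Hypothetical check: does this host currently hold the VIP?
VIP="192.168.0.99"
IFACE="ens33"

# Reads `ip -o -4 addr show` style output on stdin and succeeds
# if the VIP appears as one of the listed addresses.
holds_vip() {
    grep -qF "inet ${VIP}/"
}

# Only query the real interface when the `ip` tool is available.
if command -v ip >/dev/null 2>&1; then
    if ip -o -4 addr show dev "$IFACE" 2>/dev/null | holds_vip; then
        echo "$(uname -n): VIP $VIP is bound to $IFACE"
    else
        echo "$(uname -n): VIP $VIP is not bound to $IFACE"
    fi
fi
```

Run it on both lvs01 and lvs02. Normally only lvs01 should report the VIP; after `systemctl stop keepalived` on lvs01, lvs02 should report it instead. If both nodes report the VIP at the same time, the VRRP advertisements are probably being dropped by a firewall.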

III. Installing the highly available Kubernetes cluster with KubeSphere

    # On the master nodes
    # Install the dependencies (I installed them on all three masters)
    yum -y install conntrack socat
    # Set this environment variable; without it, images are pulled from GitHub and Google by default
    export KKZONE=cn
    # Download KubeKey
    curl -sfL https://get-kk.kubesphere.io | VERSION=v1.1.1 sh -
    chmod +x kk
    # Generate the configuration file
    ./kk create config --with-kubernetes v1.20.4 --with-kubesphere v3.1.1

config-sample.yaml:

    apiVersion: kubekey.kubesphere.io/v1alpha1
    kind: Cluster
    metadata:
      name: sample
    spec:
      hosts:
      - {name: master01, address: 192.168.0.101, internalAddress: 192.168.0.101, user: root, password: ading}
      - {name: master02, address: 192.168.0.102, internalAddress: 192.168.0.102, user: root, password: ading}
      - {name: master03, address: 192.168.0.103, internalAddress: 192.168.0.103, user: root, password: ading}
      - {name: node01, address: 192.168.0.104, internalAddress: 192.168.0.104, user: root, password: ading}
      - {name: node02, address: 192.168.0.105, internalAddress: 192.168.0.105, user: root, password: ading}
      - {name: node03, address: 192.168.0.106, internalAddress: 192.168.0.106, user: root, password: ading}
      roleGroups:
        etcd:
        - master01
        - master02
        - master03
        master:
        - master01
        - master02
        - master03
        worker:
        - node01
        - node02
        - node03
      controlPlaneEndpoint:
        domain: lb.kubesphere.local
        address: "192.168.0.99"
        port: 6443
      kubernetes:
        version: v1.20.4
        imageRepo: kubesphere
        clusterName: cluster.local
      network:
        plugin: calico
        kubePodsCIDR: 10.233.64.0/18
        kubeServiceCIDR: 10.233.0.0/18
      registry:
        registryMirrors: []
        insecureRegistries: []
      addons: []

    ---
    apiVersion: installer.kubesphere.io/v1alpha1
    kind: ClusterConfiguration
    metadata:
      name: ks-installer
      namespace: kubesphere-system
      labels:
        version: v3.1.1
    spec:
      persistence:
        storageClass: ""
      authentication:
        jwtSecret: ""
      zone: ""
      local_registry: ""
      etcd:
        monitoring: false
        endpointIps: localhost
        port: 2379
        tlsEnable: true
      common:
        redis:
          enabled: false
        redisVolumSize: 2Gi
        openldap:
          enabled: false
        openldapVolumeSize: 2Gi
        minioVolumeSize: 20Gi
        monitoring:
          endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
        es:
          elasticsearchMasterVolumeSize: 4Gi
          elasticsearchDataVolumeSize: 20Gi
          logMaxAge: 7
          elkPrefix: logstash
          basicAuth:
            enabled: false
            username: ""
            password: ""
          externalElasticsearchUrl: ""
          externalElasticsearchPort: ""
      console:
        enableMultiLogin: true
        port: 30880
      alerting:
        enabled: false
        # thanosruler:
        #   replicas: 1
        #   resources: {}
      auditing:
        enabled: false
      devops:
        enabled: false
        jenkinsMemoryLim: 2Gi
        jenkinsMemoryReq: 1500Mi
        jenkinsVolumeSize: 8Gi
        jenkinsJavaOpts_Xms: 512m
        jenkinsJavaOpts_Xmx: 512m
        jenkinsJavaOpts_MaxRAM: 2g
      events:
        enabled: false
        ruler:
          enabled: true
          replicas: 2
      logging:
        enabled: false
        logsidecar:
          enabled: true
          replicas: 2
      metrics_server:
        enabled: false
      monitoring:
        storageClass: ""
        prometheusMemoryRequest: 400Mi
        prometheusVolumeSize: 20Gi
      multicluster:
        clusterRole: none
      network:
        networkpolicy:
          enabled: false
        ippool:
          type: none
        topology:
          type: none
      openpitrix:
        store:
          enabled: false
      servicemesh:
        enabled: false
      kubeedge:
        enabled: false
        cloudCore:
          nodeSelector: {"node-role.kubernetes.io/worker": ""}
          tolerations: []
          cloudhubPort: "10000"
          cloudhubQuicPort: "10001"
          cloudhubHttpsPort: "10002"
          cloudstreamPort: "10003"
          tunnelPort: "10004"
          cloudHub:
            advertiseAddress:
            - ""
            nodeLimit: "100"
          service:
            cloudhubNodePort: "30000"
            cloudhubQuicNodePort: "30001"
            cloudhubHttpsNodePort: "30002"
            cloudstreamNodePort: "30003"
            tunnelNodePort: "30004"
        edgeWatcher:
          nodeSelector: {"node-role.kubernetes.io/worker": ""}
          tolerations: []
          edgeWatcherAgent:
            nodeSelector: {"node-role.kubernetes.io/worker": ""}
            tolerations: []

Create the cluster:

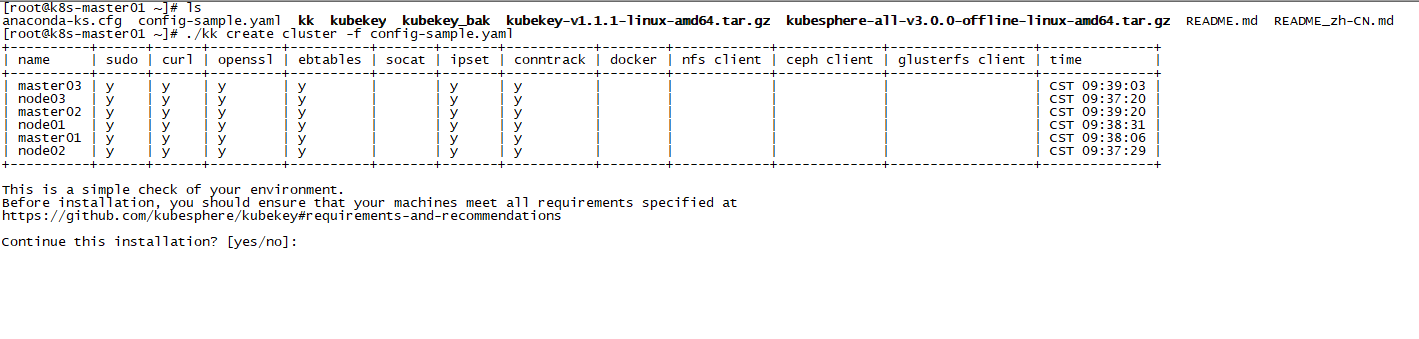

./kk create cluster -f config-sample.yaml

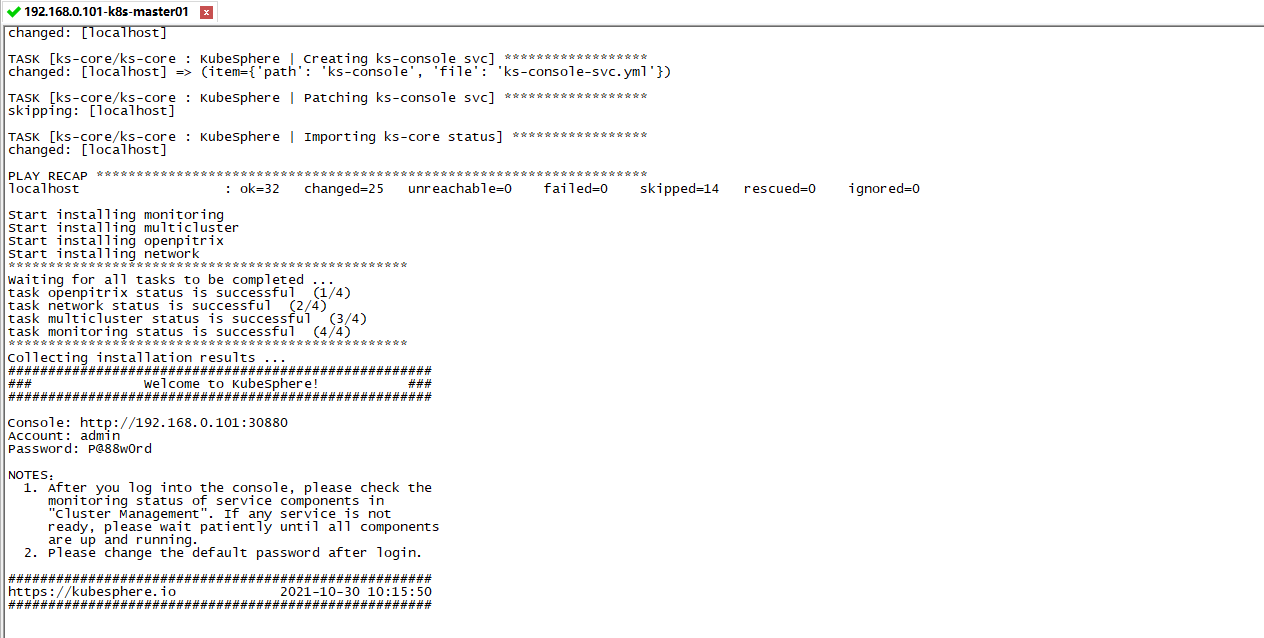

Check the installation result:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

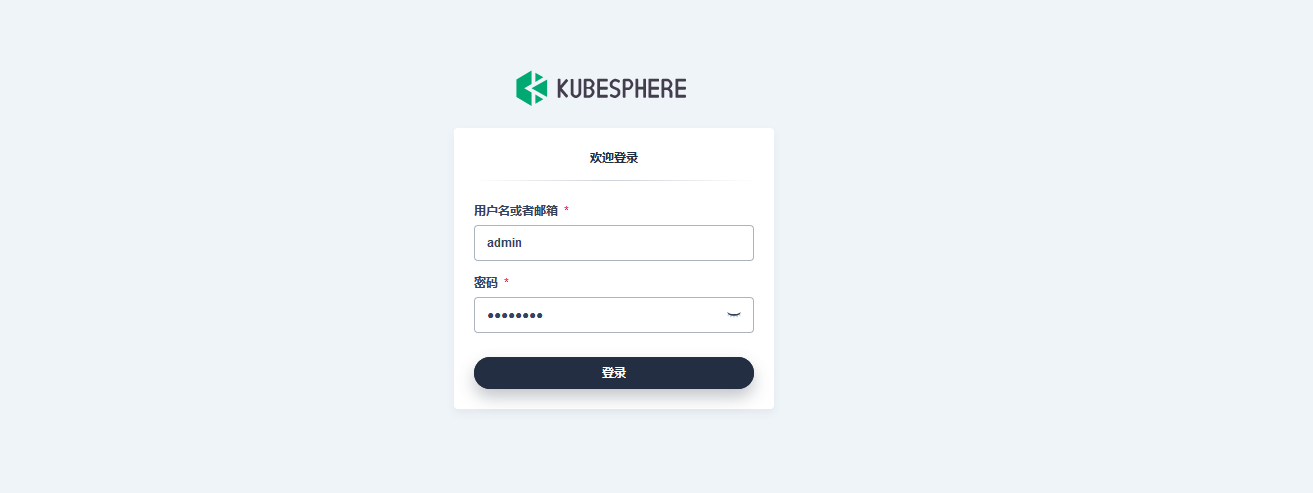

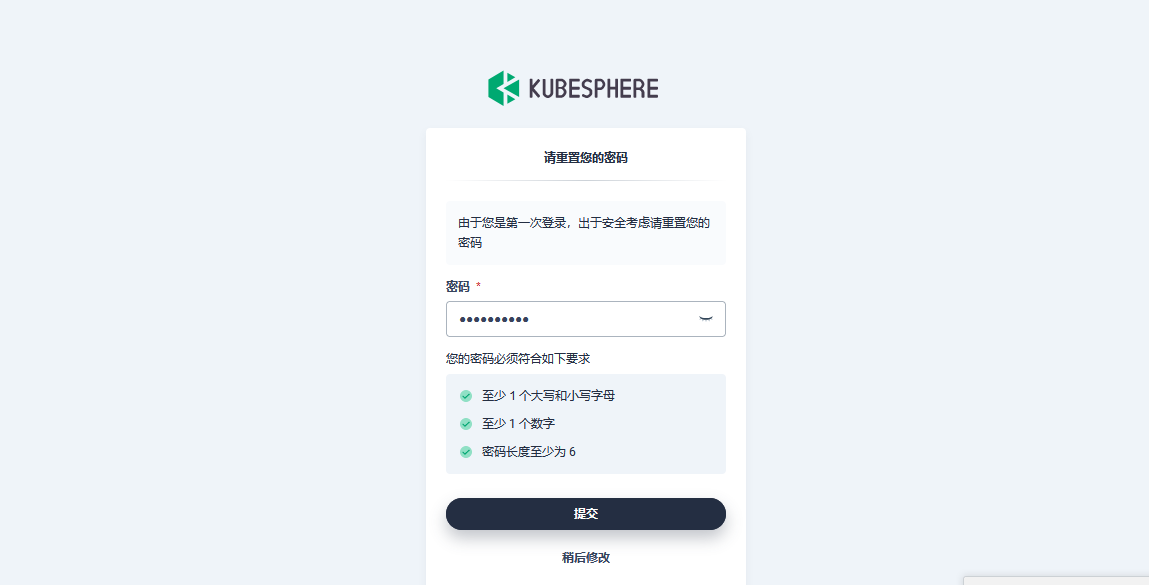

At this point the highly available Kubernetes cluster has been installed successfully.
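As a final sanity check, the number of `Ready` nodes can be compared with the six nodes planned in the environment table. This is only a sketch: the function name `count_ready_nodes` and the variable `EXPECTED_NODES` are illustrative, and it assumes `kubectl` is configured on the host where it runs (for example master01).

```shell
#!/bin/bash
# Hypothetical post-install check: count nodes whose STATUS is exactly "Ready".
# Reads `kubectl get nodes --no-headers` output on stdin (STATUS is column 2).
count_ready_nodes() {
    awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

EXPECTED_NODES=6   # 3 masters + 3 workers from the environment table

# Only query the cluster when kubectl is available on this host.
if command -v kubectl >/dev/null 2>&1; then
    ready=$(kubectl get nodes --no-headers 2>/dev/null | count_ready_nodes)
    if [ "$ready" -eq "$EXPECTED_NODES" ]; then
        echo "all $EXPECTED_NODES nodes are Ready"
    else
        echo "only $ready of $EXPECTED_NODES nodes are Ready"
    fi
fi
```

Nodes reported as `NotReady` or `Ready,SchedulingDisabled` are deliberately not counted, so a lower number points at nodes worth inspecting with `kubectl describe node`.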